Image Super Resolution using a GAN with a Perceptual Loss Function

- This was my first introduction to deep learning and AI in general! I chose this project to get acquainted with how state-of-the-art (SOTA) networks can be applied to a practical problem. I came across Ledig et al. (2017) and was fascinated by its potential applications, such as upsampling low-resolution MRI scans instead of purchasing more expensive, higher-resolution equipment.

- The proposed generator network is a version of ResNet with 16 residual blocks that uses skip connections. It is trained with a perceptual loss function that compares high-level feature maps extracted by a pretrained VGG network.
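The perceptual loss described above can be sketched in NumPy. It follows the formulation in Ledig et al. (2017): a VGG content loss (mean squared error between the feature maps of the real and super-resolved images) plus an adversarial term weighted by $10^{-3}$. This is a minimal sketch, not the code from the SRGAN-tf2 repository. It assumes the VGG feature maps and discriminator outputs have already been computed and are passed in as arrays, and the function names are hypothetical.

```python
import numpy as np

# Weight on the adversarial term, following Ledig et al. (2017).
ADV_WEIGHT = 1e-3


def content_loss(feat_hr: np.ndarray, feat_sr: np.ndarray) -> float:
    """VGG content loss: MSE between feature maps of the HR and SR images.

    Both arrays are assumed to come from the same layer of a pretrained
    VGG network (computing them is outside this sketch).
    """
    return float(np.mean((feat_hr - feat_sr) ** 2))


def adversarial_loss(d_sr: np.ndarray, eps: float = 1e-8) -> float:
    """Generator adversarial loss, -log D(G(I_LR)), averaged over the batch.

    d_sr holds the discriminator's probability that each SR image is real;
    values are clipped away from 0 so the log stays finite.
    """
    return float(np.mean(-np.log(np.clip(d_sr, eps, 1.0))))


def perceptual_loss(feat_hr: np.ndarray, feat_sr: np.ndarray, d_sr: np.ndarray) -> float:
    """Perceptual loss = content loss + 1e-3 * adversarial loss."""
    return content_loss(feat_hr, feat_sr) + ADV_WEIGHT * adversarial_loss(d_sr)


# Usage with random stand-in feature maps (shape chosen arbitrarily).
rng = np.random.default_rng(0)
feat_hr = rng.standard_normal((2, 24, 24, 512))
feat_sr = feat_hr + 0.1 * rng.standard_normal(feat_hr.shape)
d_sr = np.array([0.3, 0.6])
print(perceptual_loss(feat_hr, feat_sr, d_sr))
```

Two implementation choices here are for numerical convenience only. The adversarial term is averaged over the batch, whereas the paper writes it as a sum over training samples. The discriminator output is also clipped before the log.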

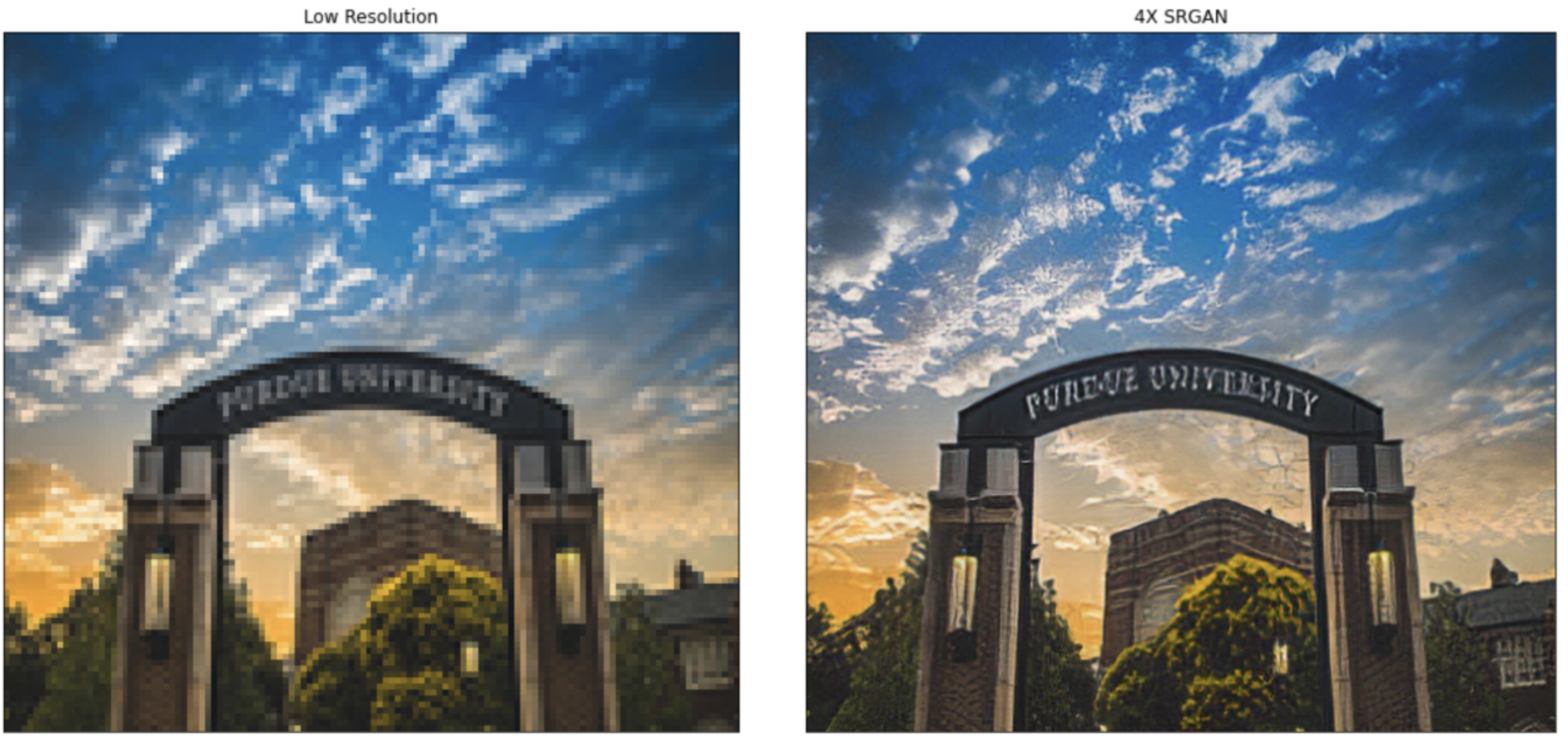

- The network was then trained for $50,000$ steps on the DIV2K dataset. Throughout training, I closely monitored the perceptual and discriminator loss trends to ensure the $4\times$ upscaled images were as close as possible to the originals. The results are shown in the image above.

- This project was presented for my graduate AI course taught by Prof. David Inoue. The paper for this class went through a thorough literature review and multiple rounds of peer review, which sharpened my research writing skills and culminated in the following presentation!
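The $4\times$ DIV2K training setup from the bullet above requires low- and high-resolution patches that line up exactly. Below is a minimal NumPy sketch of aligned random cropping. It assumes each high-resolution image is paired with a low-resolution version that is exactly 4 times smaller in each dimension. The function name and the 24-pixel LR patch size are illustrative assumptions, not details taken from SRGAN-tf2. The patch size is chosen so that it yields the 96×96 HR patches used by Ledig et al.

```python
import numpy as np

SCALE = 4      # upscaling factor
LR_PATCH = 24  # assumed LR patch size; 24 * 4 = 96-pixel HR patches


def random_aligned_crop(lr_img: np.ndarray, hr_img: np.ndarray,
                        rng: np.random.Generator,
                        lr_patch: int = LR_PATCH, scale: int = SCALE):
    """Return matching (lr_patch, hr_patch) crops from an LR/HR image pair.

    lr_img has shape (h, w, C); hr_img must have shape (h*scale, w*scale, C).
    """
    h, w = lr_img.shape[:2]
    if hr_img.shape[:2] != (h * scale, w * scale):
        raise ValueError("HR image must be exactly `scale` times the LR image")
    # Pick the top-left corner in LR coordinates...
    y = int(rng.integers(0, h - lr_patch + 1))
    x = int(rng.integers(0, w - lr_patch + 1))
    lr = lr_img[y:y + lr_patch, x:x + lr_patch]
    # ...and map it to HR coordinates by multiplying by the scale factor.
    hr = hr_img[y * scale:(y + lr_patch) * scale, x * scale:(x + lr_patch) * scale]
    return lr, hr


# Usage with a stand-in image pair; the LR image here is just every 4th HR pixel.
rng = np.random.default_rng(0)
hr_img = rng.random((400, 480, 3))
lr_img = hr_img[::SCALE, ::SCALE]
lr, hr = random_aligned_crop(lr_img, hr_img, rng)
print(lr.shape, hr.shape)  # (24, 24, 3) (96, 96, 3)
```

The LR image is cropped first, and its offsets are then scaled by 4. This guarantees that every LR pixel in the patch corresponds to a 4×4 block in the HR patch, which is the block the generator is asked to reconstruct.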

Here is the link to the code repository for this project: SRGAN-tf2